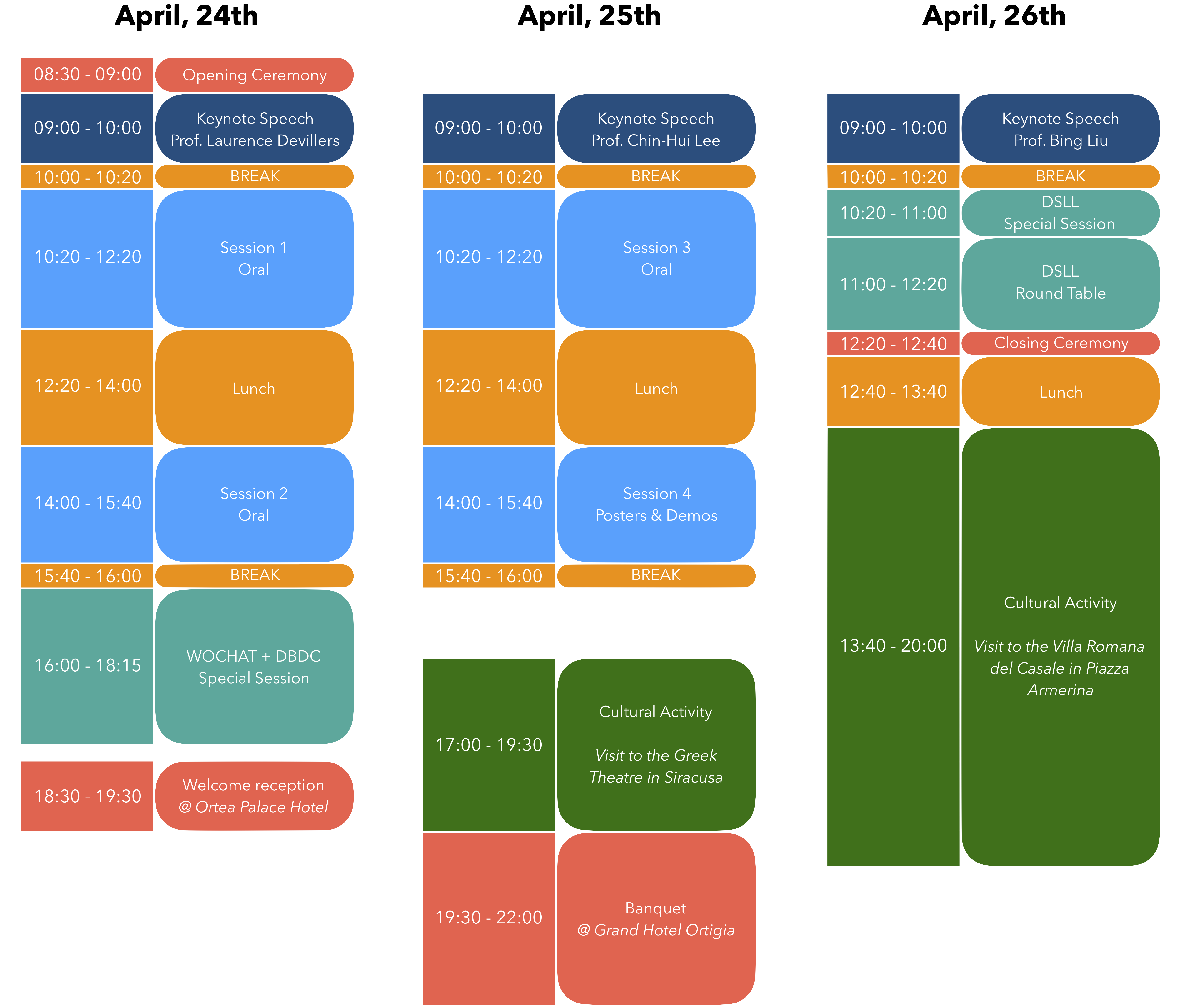

April 24th

Keynote 1

09:00 – 10:00

Prof. Laurence Devillers

Affective robots and Ethics

Talk during social interactions naturally involves the exchange of propositional content but also, and perhaps more importantly, the expression of interpersonal relationships, as well as displays of emotion, affect, interest, etc. A companion machine (robot, chatbot or ECA) needs these skills to create and maintain a long-term social relationship through verbal and non-verbal interaction. Affective robots and conversational agents like Google Home bring a new dimension to interaction and could become a means of influencing individuals. “Nudges” exhibited by robotic, intelligent or autonomous systems are defined as overt or hidden suggestions or manipulations designed to influence the behavior or emotions of a user. They are currently neither regulated nor evaluated, and remain very obscure. Such social and affective interaction requires the robot to be able to represent and understand some complex human social behavior. Broader ethical reflection must accompany the scientific and technological development of robots to ensure that their relationship with human beings is harmonious and acceptable. The ethical issues, including safety, privacy, and dependability of robot behavior, are also increasingly widely discussed. The talk will review these challenges.

Prof. Laurence Devillers received her PhD degree in Computer Science from University Paris-Orsay, France, in 1992 and her HDR (habilitation dissertation) in Computer Science, “Emotion in interaction: Perception, detection and generation”, from University Paris-Orsay, France, in 2006. Laurence Devillers is a full Professor of Computer Science and Artificial Intelligence at Sorbonne University/CNRS (LIMSI lab., Orsay), working on affective robotics, spoken dialog, machine learning, and ethics. She is the author of more than 150 scientific publications (h-index: 35). In 2017, she wrote the book “Des Robots et des Hommes: mythes, fantasmes et réalité” (Plon, 2017) to explain the urgency of building social and affective robotic systems with ethics by design. The new AI and robotics applications in domains such as healthcare or education must be introduced in ways that build trust and understanding, and that respect human and civil rights. The ethics of AI and robotics is an essential topic for the economy and for society. Since 2014, she has been a member of the French Commission on the Ethics of Research in Digital Sciences and Technologies (CERNA) of Allistène and has contributed to several reports on Research Ethics on Robotics (2014) and Research Ethics on Machine Learning. Since 2016, she has been involved in “The IEEE Global Initiative for Ethical Considerations in the Design of Autonomous Systems” and heads the 7008 working group on “Standard for Ethically Driven Nudging for Robotic, Intelligent and Autonomous Systems”. She is also involved in the Data IA Institute (Orsay) and the French national HUB IA.

Session 1 – Oral

Chairs: Joseph Mariani & Odette Scharenborg

10:20 – 10:40

Stefan Constantin, Jan Niehues and Alex Waibel

Multi-task learning to improve natural language understanding

10:40 – 11:00

David Griol, Zoraida Callejas and Jose F. Quesada

Managing Multi-task Dialogs by means of a Statistical Dialog Management Technique

11:00 – 11:20

Kenji Iwata, Takami Yoshida, Hiroshi Fujimura and Masami Akamine

Transfer Learning for Unseen Slots in End-to-end Dialogue State Tracking

11:20 – 11:40

Koki Tanaka, Koji Inoue, Shizuka Nakamura, Katsuya Takanashi and Tatsuya Kawahara

End-to-end modeling for selection of utterance constructional units via system internal states

11:40 – 12:00

Jeremy Auguste, Frederic Bechet, Géraldine Damnati and Delphine Charlet

Skip Act Vectors: integrating dialogue context into sentence embeddings

12:00 – 12:20

Vladislav Maraev, Christine Howes and Jean-Philippe Bernardy

Predicting laughter relevance spaces in dialogue

Session 2 – Oral

Chairs: Kristiina Jokinen & Géraldine Damnati

14:00 – 14:20

Koh Mitsuda, Ryuichiro Higashinaka, Taichi Katayama and Junji Tomita

Generating supportive utterances for open-domain argumentative dialogue systems

14:20 – 14:40

Michael Barz and Daniel Sonntag

Incremental Improvement of a Question Answering System by Re-ranking Answer Candidates

14:40 – 15:00

Xingkun Liu, Arash Eshghi, Pawel Swietojanski and Verena Rieser

Benchmarking Natural Language Understanding Services for building Conversational Agents

15:00 – 15:20

Ryuichiro Higashinaka, Kotaro Funakoshi, Michimasa Inaba, Yuiko Tsunomori, Tetsuro Takahashi and Reina Akama

Dialogue system live competition: identifying problems with dialogue systems through live event

15:20 – 15:40

Odette Scharenborg and Mark Hasegawa-Johnson

Position Paper: Brain Signal-based Dialogue Systems

WOCHAT + DBDC Special Session

16:00 – 16:15

Introduction to WOCHAT + DBDC

16:15 – 16:30

Ibrahim Taha Aksu, Rafael Banchs, Nancy Chen and Luis Fernando D’Haro

Reranking of responses using transfer learning for a retrieval-based chatbot

16:30 – 16:45

Thi Ly Vu, Kyaw Zin Tun and Rafael Banchs

Online FAQ Chatbot for Customer Support

16:45 – 17:00

Kazuaki Furumai, Tetsuya Takiguchi and Yasuo Ariki

Generation of Objections Using Topic and Claim Information in Debate Dialogue System

17:00 – 17:15

Hiromi Narimatsu, Ryuichiro Higashinaka, Hiroaki Sugiyama, Masahiro Mizukami and Tsunehiro Arimoto

Analyzing How a Talk Show Host Performs Follow-Up Questions for Developing an Interview Agent

17:15 – 17:25

Emer Gilmartin

What’s Chat and Where to Find it

17:25 – 17:35

DBDC Shared-task overview presentation

Ryuichiro Higashinaka, Luis F. D’Haro, Bayan Abu Shawar, Rafael Banchs, Kotaro Funakoshi, Michimasa Inaba, Yuiko Tsunomori, Tetsuro Takahashi, and Joao Sedoc

Overview of the dialogue breakdown detection challenge 4

17:35 – 17:45

DBDC Sponsor presentations

17:45 – 18:15

DBDC Poster Session

JongHo Shin, Alireza Dirafzoon, and Aviral Anshu

Context-enriched Attentive Memory Network with Global and Local Encoding for Dialogue Breakdown Detection

Hiroaki Sugiyama

Dialogue breakdown detection using BERT with traditional dialogue features

Mariya Hendriksen, Artuur Leeuwenberg, and Marie-Francine Moens

LSTM for Dialogue Breakdown Detection: Exploration of Different Model Types and Word Embeddings

Chih-hao Wang, Sosuke Kato and Tetsuya Sakai

RSL19BD at DBDC4: Ensemble of Decision Tree-based and LSTM-based Models

April 25th

Keynote 2

09:00 – 10:00

Prof. Chin-Hui Lee

Goal-Driven Multi-Turn Dialogue Processing: from Call Routing Search to Entropy Minimization Dialogue Management (EMDM)

For many goal-driven tasks, dialog processing is often confined to searching for a particular goal in a set. Each individual goal can be reached as long as a subset or all of the attributes corresponding to the goal can be clearly specified. Some attributes are associated with multiple goals, and some goals can be defined with just a small number of attributes. To reach a user’s goal efficiently in a small number of turns, we first describe a call routing system that resolves goal ambiguity by formulating disambiguation questions that are more likely to lead to a smaller subset of potential destinations after each system-user interaction. Next, we cast such a goal-driven task as a multi-turn search problem and present a probabilistic dialog representation using dynamically evolving states, each consisting of a system-initiated question and a user’s reply. Finally, we show that a goal can be reached in the smallest number of turns if the system asks questions related to the attribute with the maximum entropy. We experiment on a music search task with 38,177 songs and 12 attributes, such as album, singer, and style. The EMDM strategy always outperforms other state-of-the-art approaches, reaching the user’s goals in the smallest number of turns. Speech recognition and language understanding errors can also be accommodated by expanding the dynamic dialog states to include multiple candidates arising from potential errors.
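The maximum-entropy question selection described above can be illustrated with a short Python sketch. This is a toy under stated assumptions, not the EMDM system from the talk: the catalogue, its attribute values, and the function names (`attribute_entropy`, `pick_question`, `run_dialogue`) are hypothetical, the simulated user is assumed to answer every question correctly (no speech recognition or understanding errors), and ties between equally informative attributes are broken by attribute order.

```python
import math
from collections import Counter
from typing import Dict, List, Set, Tuple

Candidate = Dict[str, str]

# Hypothetical toy catalogue (not the 38,177-song dataset from the talk):
# each candidate goal is described by attribute -> value.
SONGS: List[Candidate] = [
    {"album": "A", "singer": "X", "style": "pop"},
    {"album": "A", "singer": "Y", "style": "rock"},
    {"album": "B", "singer": "X", "style": "rock"},
    {"album": "C", "singer": "Z", "style": "pop"},
]


def attribute_entropy(candidates: List[Candidate], attr: str) -> float:
    """Shannon entropy (in bits) of the values of `attr` over the remaining candidates."""
    counts = Counter(c[attr] for c in candidates)
    total = len(candidates)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def pick_question(candidates: List[Candidate], asked: Set[str]) -> str:
    """Pick the not-yet-asked attribute with maximum entropy (ties: first in attribute order)."""
    remaining = [a for a in candidates[0] if a not in asked]
    return max(remaining, key=lambda a: attribute_entropy(candidates, a))


def run_dialogue(candidates: List[Candidate], user_goal: Candidate) -> Tuple[List[Candidate], int]:
    """Simulate turns until one candidate is left or every attribute has been asked.

    Assumes the user always answers correctly (no ASR/NLU errors).
    """
    asked: Set[str] = set()
    turns = 0
    while len(candidates) > 1 and len(asked) < len(candidates[0]):
        attr = pick_question(candidates, asked)
        asked.add(attr)
        answer = user_goal[attr]  # simulated user reply
        candidates = [c for c in candidates if c[attr] == answer]
        turns += 1
    return candidates, turns


if __name__ == "__main__":
    final, n = run_dialogue(SONGS, SONGS[0])
    print(n, final)  # 2 [{'album': 'A', 'singer': 'X', 'style': 'pop'}]
```

Each turn asks about the attribute whose values are most evenly spread over the remaining candidates, so each answer tends to rule out the largest share of them. Accommodating recognition errors, as mentioned in the abstract, would require keeping several candidate answers per turn rather than the single hard filter used here.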

Chin-Hui Lee is a professor at the School of Electrical and Computer Engineering, Georgia Institute of Technology. Before joining academia in 2001, he had accumulated 20 years of industrial experience, ending at Bell Laboratories, Murray Hill, as a Distinguished Member of Technical Staff and Director of the Dialogue Systems Research Department. Dr. Lee is a Fellow of the IEEE and a Fellow of ISCA. He has published over 500 papers and 30 patents, with more than 42,000 citations and an h-index of 80 on Google Scholar. He has received numerous awards, including the Bell Labs President’s Gold Award in 1998. He won the IEEE Signal Processing Society’s 2006 Technical Achievement Award for “Exceptional Contributions to the Field of Automatic Speech Recognition”. In 2012 he gave an ICASSP plenary talk on the future of automatic speech recognition. In the same year he was awarded the ISCA Medal for Scientific Achievement for “pioneering and seminal contributions to the principles and practice of automatic speech and speaker recognition”.

Session 3 – Oral

Chairs: Satoshi Nakamura & Frederic Bechet

10:20 – 10:40

Koji Inoue, Divesh Lala, Kenta Yamamoto, Katsuya Takanashi and Tatsuya Kawahara

Engagement-based adaptive behaviors for laboratory guide in human-robot dialogue

10:40 – 11:00

Bernd Kiefer, Anna Welker and Christophe Biwer

VOnDA: A Framework for Ontology-Based Dialogue Management

11:00 – 11:20

Yuiko Tsunomori, Ryuichiro Higashinaka, Takeshi Yoshimura and Yoshinori Isoda

Chat-oriented dialogue system that uses user information acquired through dialogue and its long-term evaluation

11:20 – 11:40

Kazunori Komatani, Shogo Okada, Haruto Nishimoto, Masahiro Araki and Mikio Nakano

Multimodal Dialogue Data Collection and Analysis of Annotation Disagreement

11:40 – 12:00

Annalena Aicher, Niklas Rach and Wolfgang Minker

Opinion Building based on the Argumentative Dialogue System BEA

12:00 – 12:20

Nicolas Wagner, Matthias Kraus, Niklas Rach and Wolfgang Minker

How to address humans: System barge-in in multi-user HRI

Session 4 – Posters & Demos

Chairs: Sandro Cumani & Erik Marchi

14:00 – 15:40

Ryo Ishii, Taichi Katayama, Ryuichiro Higashinaka and Junji Tomita

Automatic Head-Nod Generation using Utterance Text considering Personality Traits

Svetlana Stoyanchev and Badrinath Jayakumar

Context Aware Dialog Management with Unsupervised Ranking

Asier López Zorrilla, Mikel Develasco Vázquez and Maria Inés Torres

A Differentiable Generative Adversarial Network for Open Domain Dialogue

Felix Gervits, Anton Leuski, Claire Bonial, Carla Gordon and David Traum

A Classification-Based Approach to Automating Human-Robot Dialogue

Graham Wilcock and Kristiina Jokinen

Towards increasing naturalness and flexibility in human-robot dialogue systems

Hayato Katayama, Shinya Fujie and Tetsunori Kobayashi

End-to-middle training based action generation for multi-party conversation robot

Maulik Madhavi, Tong Zhan, Haizhou Li and Min Yuan

First Leap Towards Development of Dialogue System for Autonomous Bus

Koji Inoue, Kohei Hara, Divesh Lala, Shizuka Nakamura, Katsuya Takanashi and Tatsuya Kawahara

A job interview dialogue system with autonomous android ERICA

Koichiro Yoshino, Yukitoshi Murase, Nurul Lubis, Kyoshiro Sugiyama, Hiroki Tanaka, Sakriani Sakti, Shinnosuke Takamichi and Satoshi Nakamura

Spoken Dialogue Robot for Watching Daily Life of Elderly People

April 26th

Keynote 3

09:00 – 10:00

Prof. Bing Liu

Continuous Knowledge Learning in Chatbots

Dialogue and question-answering (QA) systems (which we call chatbots) are increasingly used in practice for many applications. However, existing chatbots either do not use an explicit knowledge base or use a fixed knowledge base that does not expand after deployment, which limits the scope of their applications. To become more intelligent, chatbots need to learn new knowledge continuously, both in online chatting and in offline processing. We humans learn a great deal of knowledge through interactive conversations, about the world around us and about our conversation partners. Chatbots should also have this capability. In this talk, I will first give an introduction to continuous or lifelong learning, and then discuss work towards building a continuous knowledge learning engine for chatbots.
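As a rough illustration of the contrast drawn above between a fixed knowledge base and one that keeps growing after deployment, here is a minimal Python sketch. It is a toy example resting on assumptions, not the knowledge learning engine discussed in the talk: facts are assumed to be simple (subject, relation) → object entries, and `LearningKB`, `chat_turn` and the `ask_user` callback (standing in for a clarification turn in which the user supplies a missing fact) are all hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Tuple

# Hypothetical fact key: (subject, relation), e.g. ("Chicago", "located_in").
FactKey = Tuple[str, str]


@dataclass
class LearningKB:
    """Toy knowledge base that can keep expanding after deployment."""
    facts: Dict[FactKey, str] = field(default_factory=dict)

    def answer(self, subject: str, relation: str) -> Optional[str]:
        # Return the stored object, or None if the fact is unknown.
        return self.facts.get((subject, relation))

    def learn(self, subject: str, relation: str, obj: str) -> None:
        # Store a fact acquired during a conversation (no verification here).
        self.facts[(subject, relation)] = obj


def chat_turn(kb: LearningKB, subject: str, relation: str,
              ask_user: Callable[[str, str], str]) -> Tuple[str, bool]:
    """Answer from the KB if possible; otherwise ask the user and learn the reply.

    Returns (answer, learned_now).
    """
    known = kb.answer(subject, relation)
    if known is not None:
        return known, False
    reply = ask_user(subject, relation)  # hypothetical clarification turn
    kb.learn(subject, relation, reply)
    return reply, True


if __name__ == "__main__":
    kb = LearningKB({("Paris", "capital_of"): "France"})
    print(chat_turn(kb, "Chicago", "located_in", lambda s, r: "Illinois"))  # ('Illinois', True)
    print(chat_turn(kb, "Chicago", "located_in", lambda s, r: "?"))         # ('Illinois', False)
```

The second query about the same fact is answered directly from the knowledge base without asking the user again. Note that this sketch stores whatever the user says without any verification.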

Bing Liu is a distinguished professor of Computer Science at the University of Illinois at Chicago (UIC). He received his Ph.D. in Artificial Intelligence from the University of Edinburgh. Before joining UIC, he was a faculty member at the School of Computing, National University of Singapore. His research interests include lifelong learning, sentiment analysis, chatbots, natural language processing (NLP), data mining, and machine learning. He has published extensively in top conferences and journals. Three of his papers have received Test-of-Time awards: two from SIGKDD (ACM Special Interest Group on Knowledge Discovery and Data Mining), and one from WSDM (ACM International Conference on Web Search and Data Mining). He is also a recipient of the ACM SIGKDD Innovation Award, the most prestigious technical award from SIGKDD. He has authored four books: two on sentiment analysis, one on lifelong learning, and one on Web mining. Some of his work has been widely reported in the international press, including a front-page article in the New York Times. In terms of professional service, he has served as program chair of many leading data mining conferences, including KDD, ICDM, CIKM, WSDM, SDM, and PAKDD, as associate editor of many leading journals such as TKDE, TWEB, DMKD and TKDD, and as area chair or senior PC member of numerous NLP, AI, Web, and data mining conferences. Additionally, he served as Chair of ACM SIGKDD from 2013 to 2017. He is a Fellow of the ACM, AAAI, and IEEE.

DSLL Special Session

10:20 – 10:40

Maria Di Maro, Antonio Origlia and Francesco Cutugno

Learning between the Lines: Interactive Learning Modules within Corpus Design

10:40 – 11:00

Claudio Greco, Barbara Plank, Raquel Fernández and Raffaella Bernardi

Measuring Catastrophic Forgetting in Visual Question Answering

DSLL Round Table

11:00 – 12:20

Eneko Agirre, Anders Jonsson and Anthony Larcher

Framing Lifelong Learning as Autonomous Deployment: Tune Once Live Forever

Anselmo Peñas, Mathilde Veron, Camille Pradel, Arantxa Otegi, Guillermo Echegoyen and Alvaro Rodrigo

Continuous Learning for Question Answering

Don Perlis, Clifford Bakalian, Justin Brody, Timothy Clausner, Matthew Goldberg, Adam Hamlin, Vincent Hsiao, Darsana Josyula, Chris Maxey, Seth Rabin, David Sekora, Jared Shamwell and Jesse Silverberg

Live and Learn, Ask and Tell: Agents Over Tasks

Mathilde Veron, Sahar Ghannay, Anne-Laure Ligozat and Sophie Rosset

Lifelong learning and task-oriented dialogue system: what does it mean?

Mark Cieliebak, Jan Deriu and Olivier Galibert

Towards Understanding Lifelong Learning for Dialogue Systems